Is Your AI Strategy Outpacing Governance?

Enterprises are adopting AI at breakneck speed—from ChatGPT in marketing to GitHub Copilot in development. But many still rely on pre-AI governance structures.

According to McKinsey’s 2024 Global AI Survey, 79% of organizations report AI adoption in at least one business unit, but only 21% have established policies for AI risk management.

This disconnect creates an AI audit gap—leaving organizations exposed to emerging threats, compliance failures, and reputational damage.

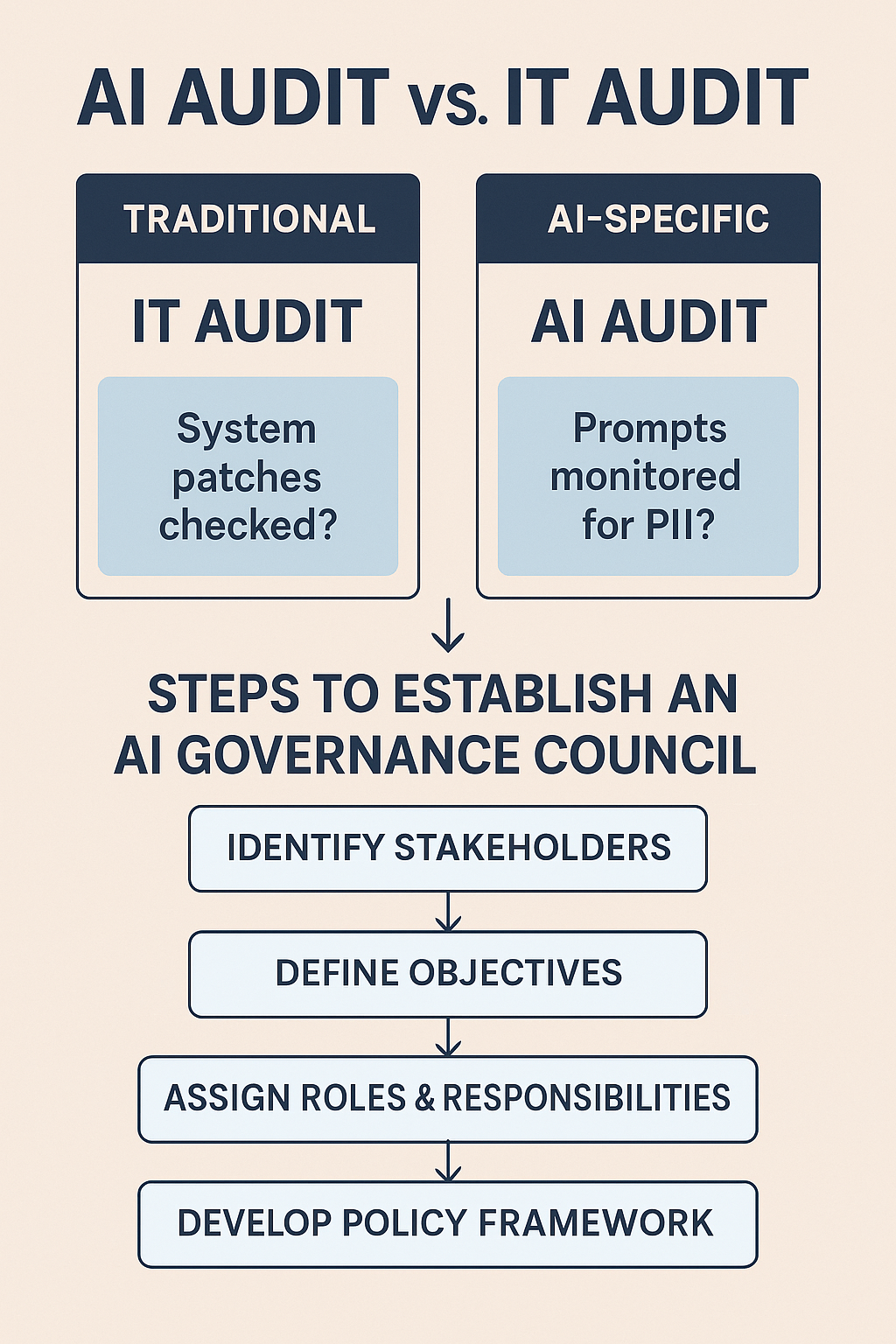

What Traditional IT Audits Miss in an AI-Driven World

Traditional audits focus on:

- Are users authenticated securely?

- Are systems patched and updated?

- Are logs retained for compliance?

While foundational, these checks are inadequate for AI-specific risks. Here’s what they often miss:

1. Prompt Injection and Contextual Leakage

Malicious inputs can trick generative AI into revealing sensitive data or ignoring safeguards.

In 2023, researchers showed how a ChatGPT-based bot could be manipulated with a prompt like “Ignore previous instructions” to leak confidential data.

Without prompt logging or monitoring, organizations may unintentionally expose PII or IP.

2. Data Training and Intellectual Property (IP) Risk

Employees pasting sensitive data into AI tools (e.g., ChatGPT, Gemini) may unknowingly feed it into vendor training datasets.

OpenAI’s default settings allow inputs to be used to improve models unless explicitly opted out.

Audits often ignore prompt histories and vendor data retention terms.

3. Model Drift and Bias

AI models evolve over time. Fine-tuning or shifting input patterns can cause model drift, resulting in biased or inaccurate outputs.

A customer support chatbot might begin prioritizing certain demographics if trained on skewed data—yet many companies don’t track model versions or behavior over time.

4. Undocumented Shadow AI Integrations

Low-code and SaaS tools increasingly embed AI by default—think Microsoft 365 Copilot or Salesforce Einstein.

Without an AI asset inventory, these “black box” features operate unchecked. (See our full article on Shadow AI vs. Sanctioned AI).”

Real-World Failures Show the Stakes

Ignoring the AI audit gap isn’t hypothetical. Here are two recent cases that made headlines:

Samsung Data Leak (2023):

Employees pasted confidential source code into ChatGPT, unintentionally exposing internal IP. Samsung responded by banning generative AI tools.Source: Reuters

Air Canada Chatbot Lawsuit (2024):

An AI-powered chatbot gave incorrect legal advice, resulting in a lawsuit. The court held Air Canada accountable for its AI’s behavior.Source: CBC News

These incidents could have been mitigated with input monitoring, output validation, and vendor oversight.

Regulatory Pressure Adds Urgency

Global regulations are closing in on AI governance:

- EU AI Act (2024): Classifies certain AI systems as high-risk, with strict transparency and compliance mandates.

- GDPR: Applies to AI handling personal data, with fines up to €20 million or 4% of global revenue.

- HIPAA/SEC/FDA: Industry-specific rules add complexity for healthcare, finance, and regulated sectors.

Without AI-aware audits, organizations risk non-compliance, fines, and lost trust.

Bridging the AI Audit Gap: What You Can Do Now

To stay ahead, organizations must evolve from traditional IT audits to AI-specific governance. Here’s how:

1. Update Audit Checklists with AI Criteria

Start asking:

Are all AI tools inventoried and approved?

Are data inputs monitored for PII or IP leakage?

Are prompts, outputs, and interactions logged?

2. Adopt Emerging Frameworks

Use standards designed for AI governance:

NIST AI Risk Management Framework (transparency, accountability, mapping risks)

ISO/IEC 42001 (AI Management Systems)

These help mitigate bias, ensure oversight, and meet compliance expectations.

3. Collaborate Across Functions

AI is not just an IT issue. Involve:

- Legal – Review vendor contracts for data/IP usage.

- Compliance – Ensure alignment with GDPR, HIPAA, etc.

- Security – Implement DLP, access control, and activity logging.

- Business Units – Track adoption patterns and tool usage.

Form an AI Governance Council to meet quarterly and align efforts.

4. Define Guardrails for Shadow AI

Draft clear usage policies for employee interaction with external AI tools.

Tip: Designate an internal AI risk owner to oversee vendor terms and scale policy adoption gradually.

5. Support Small Organizations

Even without enterprise budgets, small teams can:

- Use free NIST templates for AI risk reviews.

- Conduct quarterly inventories using IT asset tools.

- Train staff on safe AI use and prompt hygiene.

Final Thoughts

AI is transforming business—but are you ready to manage its risks?

The gap between adoption and oversight is growing. By treating AI as a transformational capability (not just another SaaS tool), you can reduce risk, build trust, and maintain a competitive edge. (See our full article on AI in the Workplace: Boosting Productivity or Replacing Jobs?

✅ Next Steps:

- Run a quarterly AI tool inventory.

- Adopt NIST or ISO/IEC 42001 to govern usage.

- Educate teams and review vendors proactively.

📣 Don’t wait for a breach or lawsuit to act—prioritize AI governance today.

“For a comprehensive AI risk roadmap, see our AI Risk Management Guide.”

Samsung Data Leak (2023):

Samsung Data Leak (2023): Air Canada Chatbot Lawsuit (2024):

Air Canada Chatbot Lawsuit (2024): Are all AI tools inventoried and approved?

Are all AI tools inventoried and approved?